The Network Law Review is pleased to present a symposium entitled “Dynamics of Generative AI,” where lawyers, economists, computer scientists, and social scientists gather their knowledge around a central question: what will define the future of AI ecosystems? To bring all this expertise together, a conference co-hosted by the Weizenbaum Institute and the Amsterdam Law & Technology Institute will be held on March 22, 2024. Be sure to register in order to receive the recording.

This contribution is signed by Anouk van der Veer (Ph.D. candidate at the European University Institute) & Friso Bostoen (Assistant Professor at Tilburg University). The entire symposium is edited by Thibault Schrepel (Vrije Universiteit Amsterdam) and Volker Stocker (Weizenbaum Institute).

***

The use of generative AI (GenAI) exploded by the end of 2022. Now, just one year later, EU lawmakers are already calling for the regulation of competition (or the lack of it) in the GenAI market. In this short article, we take a closer look at the regulatory debate. We start with a snapshot of the GenAI industry as it exists at the end of 2023 (Section 1). Then, we consider two regulatory paths: one of intervention, the other of non-intervention, examining the arguments pro and contra each approach (Section 2) before concluding (Section 3).

1. A Snapshot of the Generative AI Industry

Any debate on regulation (and, indeed, non-regulation) should start from a solid understanding of the industry. With a recent and quickly evolving technology like GenAI, that is a challenge. Nevertheless, this section aims to provide a snapshot of the GenAI industry and its competitive dynamics.

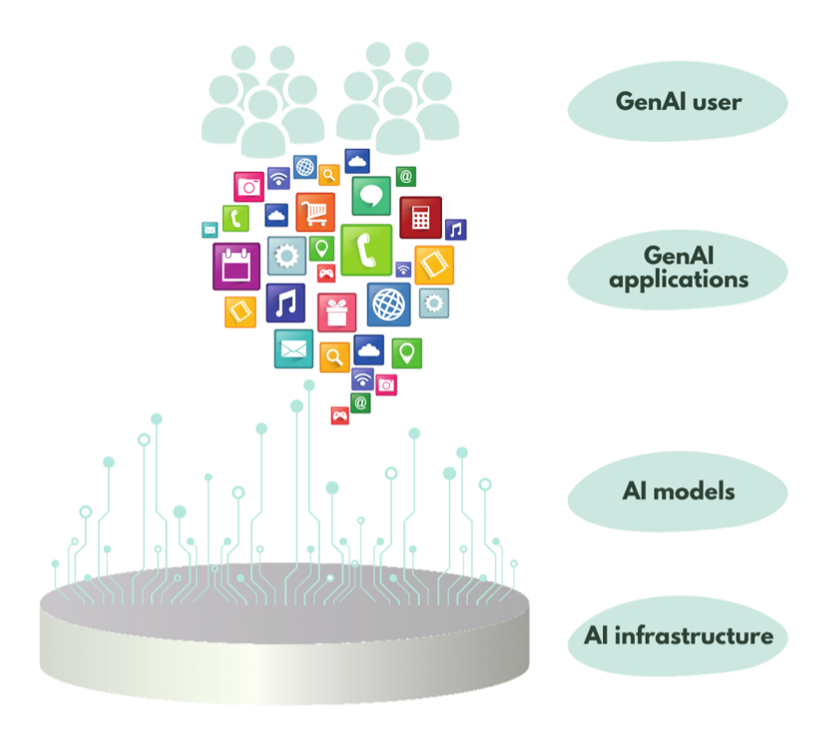

The competitive landscape of GenAI can be simplified into four layers: infrastructure, models, applications and users.[1]

- The essential infrastructure is provided by compute and data service providers. Compute providers include cloud services and producers of specialised microchips, while data service providers allow for the creation, collection, and preparation of data. All of these inputs are then used to train AI models.

- Relying on that infrastructure, developers build ‘foundation models’.[2] Foundation models are pre-trained on extensive datasets. As the name implies, the models provide a foundation for others to build on, e.g., by fine-tuning them using additional training data. Large language models (LLMs) represent a specific type of foundation model that is trained to generate text, code, images, video, and audio. The models are underpinned by a technological leap known as the ‘transformer’.[3]

- The third layer consists of applications that serve as the bridge between models and users. It is, in other words, the way in which GenAI is deployed. Applications can be fully dedicated to providing GenAI functionality, or they can simply include a GenAI feature.

- The final layer consists of the end-users. Both firms and individuals use the applications to improve efficiency, accuracy, or creativity—this, in turn, improves the model/application.[4]

For an example, let us look at the quintessential GenAI company, OpenAI. Its latest model is called GPT-4. This model was trained using compute provided by Microsoft’s Azure cloud infrastructure, which is, in turn, powered by NVIDIA’s microchips (in particular, its graphics processing units or GPUs).[5] The training data was collected from the open internet, licensed from third parties, and provided by users.[6] Developers can build applications on GPT-4’s foundation using application programming interfaces (APIs) made available by OpenAI.[7] At the same time, OpenAI also has its own consumer-facing application: ChatGPT.
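To make the layered structure concrete, the OpenAI example can be written down as a small data structure. This is only an illustrative sketch: the entries are taken from the example above, while the names `LayerEntry`, `OPENAI_STACK`, `LAYER_ORDER`, and `by_layer` are our own assumptions and not part of any existing taxonomy or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LayerEntry:
    """One participant (or input) in a GenAI layer. Hypothetical helper type."""
    layer: str
    role: str
    example: str


# The four layers described in the text, in value-chain order.
LAYER_ORDER = ["infrastructure", "models", "applications", "users"]

# Entries drawn from the OpenAI example; the structure itself is illustrative.
OPENAI_STACK = [
    LayerEntry("infrastructure", "compute (cloud)", "Microsoft Azure"),
    LayerEntry("infrastructure", "compute (chips)", "NVIDIA GPUs"),
    LayerEntry("infrastructure", "data", "open internet, licensed and user-provided data"),
    LayerEntry("models", "foundation model", "GPT-4"),
    LayerEntry("applications", "first-party application", "ChatGPT"),
    LayerEntry("applications", "third-party applications", "apps built on OpenAI's APIs"),
    LayerEntry("users", "end-users", "firms and individuals"),
]


def by_layer(stack):
    """Group the example names by layer, preserving value-chain order."""
    grouped = {layer: [] for layer in LAYER_ORDER}
    for entry in stack:
        grouped[entry.layer].append(entry.example)
    return grouped


if __name__ == "__main__":
    for layer, examples in by_layer(OPENAI_STACK).items():
        print(f"{layer}: {'; '.join(examples)}")
```

Laid out this way, the example shows a single firm's offering depending on partners in one layer (infrastructure) while being integrated across two others (models and applications).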

Figure 1. GenAI layers

As shown by the OpenAI example, GenAI providers tend to be active throughout the different layers. More specifically, the industry has organized itself into ecosystems, the ‘dominant organizational form of the digital age’.[8] An ecosystem is a strategic management concept, referring to ‘a group of interacting firms that depend on each other’s activities’, usually for the purpose of innovation.[9] As is typically the case in ecosystems, the orchestrators partner with complementors in some layers, while integrating into others, resulting in a complex web of relationships. For example, OpenAI benefits from Microsoft’s compute infrastructure via a $13B investment;[10] in turn, Microsoft relies on GPT-4 to add AI features to its various applications (e.g., GitHub and Bing). Microsoft is also Meta’s preferred partner for its Llama-2 model.[11] Separately, Meta is the driver of a larger AI alliance.[12] Google is integrated along the entire chain with its Google Cloud, the Gemini model by DeepMind, and chatbot Bard (along with a variety of other apps with GenAI features). At the same time, Google also puts its cloud infrastructure at the disposal of other GenAI models, e.g., via its $2B investment in Anthropic. Amazon, the third key cloud service provider in addition to Microsoft and Google, also invested in Anthropic (to the tune of $4B).[13]

The emergence of ecosystems in the industry is unsurprising. For one, building GenAI models requires immense resources. While startups like OpenAI and Anthropic are capable of building cutting-edge models, they cannot do so alone. They need customized compute resources,[14] which they get access to via partnerships with one of the three main cloud providers (Microsoft, Amazon, and Google). In turn, established players are happy to rely on innovative newcomers to revamp their services with GenAI features (e.g., Microsoft’s Office suite). The dynamic is thus one of ‘co-opetition’,[15] a combination of competition and cooperation that plays out in every layer of the GenAI value chain.

While cooperation tends to be antithetical to competition, competition does not seem to suffer in GenAI, with every day bringing new highlights. Yesterday, GPT-4 was wearing the crown; today, Google is claiming its Gemini model outperformed it on most measures.[16] What we are observing is a process of dynamic competition. Dynamic competition starts with an innovative product with different characteristics that challenges existing products. When the product is introduced to the market, competitors respond, so the innovation initiates a new round of competition. For example, GenAI technology rekindled competition between search engines—a market long considered dormant. In turn, such application competition initiates competition in the model layer, where incumbents improve their own models while funding those of third parties.

2. At the Crossroads: To Intervene or Not to Intervene?

While competition in GenAI may look healthy right now, policymakers are exploring potential regulation. So far, efforts have mostly focused on information-gathering. For example, the UK Competition & Markets Authority (CMA) and the Portuguese competition authority have each published a helpful report on the industry, and the European Commission launched a call for contributions.[17] These efforts are aimed at understanding the key competitive dynamics of the industry.

In the EU, lawmakers are already calling for more heavy-handed intervention.[18] Ex post competition law applies horizontally, including to GenAI, though we have seen no abuse of dominance investigations yet. In prodding the Commission to investigate how GenAI might be captured by the Digital Markets Act, lawmakers suggest going a step further, regulating competition ex ante. In this section, we survey arguments for and against intervention.

2.1. Arguments For Intervention

The argument for intervention is a precautionary one: ‘Sure, competition seems fierce today, but what about tomorrow?’ In fact, policymakers may feel like they have been here before. Indeed, GenAI may be another ‘platform shift’ after the iPhone heralded the last one around 2007, at least if you believe Microsoft CEO Satya Nadella.[19] But what happens during platform shifts? A period of intense competition is followed by a lack of it. The fierce initial competition—as between Apple, Google, Microsoft and many others in mobile operating systems—is won by one or two players.

The problem is not just a lack of players that competitively constrain each other in the market. If the players are diverse enough and continue to innovate, you may not need that many. But as competition lawyers know very well, market power changes things. Take mobile again: a closed iOS facing off against an open Google Android leaves users a choice of ecosystem. But what if the open ecosystem then closes up, as with Google Android?[20] Currently, the existence of open foundation models, particularly Meta’s Llama, is seen as keeping the market competitive. It means that smaller firms do not have to build their own model, the cost of which could be prohibitive, but can instead get to work with an open-source one. But what if those open models disappear?[21]

You could even question whether there is a platform shift at all. If GenAI is a disruptive innovation, it does not seem to disrupt any incumbent market positions. Those players that ascended during the previous platform shift (like Google, Amazon, and Facebook) seem to come out on top in this one as well.[22] OpenAI is more of a startup, but it is so intimately connected to Microsoft that the CMA is trying to ascertain whether the partnership is not actually a merger.[23] Whatever the case may be, as of yet, we are not seeing the displacement typical of disruptive innovation.[24] We are seeing meaningful product improvements that can be better qualified as sustaining innovation.[25] While regulation carries the risk of inhibiting market disruption, this risk is mitigated in the case of sustaining innovation. In that context, regulation can level the playing field and perhaps even promote disruptive innovation, allowing smaller players to challenge incumbents.

Of course, competition law applies in any case, so it is not so much a question of regulation but rather of enforcement. But you might want to start applying competition law early. Again, during the last platform shift, this did not happen. While Google became the dominant search engine in the early 2000s, investigations were not started until 2010, with the first decision coming down in 2017.[26] The problem is that, by that point, abusive conduct may already have cemented the platform’s dominant position or created such a position in an adjacent market. And due to network and related (e.g., learning) effects, it then becomes very hard to dislodge the dominant position. Remedies are likely to be ineffective.[27] So, better to prevent than to cure.

The anticompetitive conduct that could take place is easy to imagine. Given that data and especially compute are essential to train models, input foreclosure at the infrastructure level is a risk. Given that models function as innovation platforms, changes to their APIs can spell doom for startups relying on them. Developers got a hint of this when OpenAI integrated new functionality into ChatGPT, which promptly made their startups redundant.[28] The question is how far one wants to go in regulating such conduct: on a case-by-case basis under competition law, or ex ante with the DMA?

At present, GenAI chatbots do not fit neatly in the DMA’s list of core platform services or CPSs (are they search engines? virtual assistants? social networks?).[29] Would it be worthwhile to pursue the changes some lawmakers are proposing and bring GenAI under the DMA’s regulatory framework? While such regulation, and the compliance costs and processes it comes with, is not known to be a good fit for dynamic markets, it is important to note that it is asymmetrical (in contrast to the AI Act): it only applies to the very largest players, so startups will not be encumbered by it—indeed, it might create the space for them to thrive.

This is the argument for intervention, at least the short version. It is driven, in no small part, by the feeling that lawmakers and competition authorities ‘missed the boat’ when the last platform shift came around, and do not want to make that mistake twice. Learning from mistakes should be applauded, but there is such a thing as regulating too early, or in a setting that does not call for it. The next section sets out that argument.

2.2. Arguments Against Intervention

When one observes the booming GenAI industry fearing that history may repeat itself, that fear obscures the alternative narrative of dynamic competition. Market positions built on technology are vulnerable as the next-generation technology revitalises competition, forcing incumbents to innovate or risk losing their market position.[30]

As described above, early intervention in the GenAI industry aims to ensure competition as the market matures. But intervening in an emerging industry risks doing the exact opposite, i.e., stifling innovation and competition. This risk is particularly tangible in high-technology industries where the object of regulation is not yet well understood and market structures are still evolving. The recent reports on GenAI indicate that competition authorities are indeed still familiarizing themselves with the technology and its implications. Even the DMA acknowledges the risk of early intervention: before being designated a gatekeeper, a firm must enjoy an ‘entrenched and durable position’, which means its CPS must have had 45 million users in the EU for each of the last three years.[31] The main GenAI services have barely existed for three years (let alone boast 45 million users). Only OpenAI’s DALL-E turned three in January 2024. ChatGPT has two more years to mature, and new applications are just starting their three-year countdown.
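The durability condition mentioned above can be sketched as a simple check. This is a deliberately simplified illustration: it encodes only the user-count condition as summarised in the text (at least 45 million EU users in each of the last three years), not the Regulation's other designation criteria, and the function name and the yearly figures in the usage example are hypothetical.

```python
# Simplified sketch of the user-count condition described in the text.
# It does NOT reproduce the full gatekeeper test of the DMA.
DMA_USER_THRESHOLD = 45_000_000  # EU users, as stated in the text
DMA_YEARS_REQUIRED = 3           # "each of the last three years"


def meets_durability_condition(eu_users_by_year):
    """Return True if the threshold was met in each of the last three years.

    eu_users_by_year: yearly EU user counts for a service, oldest first.
    A service with fewer than three years of history cannot satisfy it.
    """
    if len(eu_users_by_year) < DMA_YEARS_REQUIRED:
        return False
    recent = eu_users_by_year[-DMA_YEARS_REQUIRED:]
    return all(users >= DMA_USER_THRESHOLD for users in recent)


if __name__ == "__main__":
    # Hypothetical figures, for illustration only.
    young_service = [60_000_000]                            # one year of history
    mature_service = [50_000_000, 55_000_000, 70_000_000]   # three qualifying years
    print(meets_durability_condition(young_service))   # False
    print(meets_durability_condition(mature_service))  # True
```

The first case illustrates the point made in the text: however large its user base, a service that has existed for less than three years cannot yet meet the condition.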

In an ongoing complex process of dynamic competition, decisions to intervene through competition enforcement or ex ante regulation should not be taken lightly. Recall that dynamic competition involves innovative (and differentiated) products challenging existing products, inviting competitors to respond, which initiates a new round of competition. Intervening in the competition that produces the innovative (and differentiated) product comes with risks, but that intervention also bears risks for the competition initiated by the innovation. In other words, intervention risks harming competition at two levels: the competition driving generative AI and the competition driven by generative AI.[32]

The well-known risks of intervention include firms becoming more risk-averse and spending resources on compliance rather than innovation. This could disproportionately hit smaller firms, which cannot afford to both comply with complex rules and invest in new technologies,[33] reducing the competitive pressure they exert.[34] This is especially problematic in light of the trend towards smaller GenAI models, which opens the door for more nimble competitors.[35] Studies show that small models that rely on fewer trainable parameters can perform similarly or even better.[36] For example, DeepMind’s Chinchilla[37] and Meta’s Llama[38] outperform GPT-3 with fewer parameters.[39] Llama was, in turn, outperformed by Microsoft’s Phi-2 with less than half of the parameters.[40] At a price of less than $600, Stanford researchers developed Alpaca with a similar performance to GPT-3.5.[41] Google acknowledged such a competitive threat in a leaked memo: whereas Google needs months, $10M and 540B parameters, these competitors need only weeks, $100 and 13B parameters to achieve the same.[42]
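The gap described in the leaked memo can be put in proportion with a few lines of arithmetic. The sketch below uses only the cost and parameter figures quoted above; the variable names are ours, and the ratios are a rough illustration of scale rather than a like-for-like benchmark.

```python
# Figures as quoted from the leaked Google memo in the text.
google = {"cost_usd": 10_000_000, "params_billions": 540}
challenger = {"cost_usd": 100, "params_billions": 13}

# How many times more the incumbent spends, and how much larger its model is.
cost_ratio = google["cost_usd"] / challenger["cost_usd"]
param_ratio = google["params_billions"] / challenger["params_billions"]

if __name__ == "__main__":
    print(f"Cost ratio: {cost_ratio:,.0f}x")
    print(f"Parameter ratio: {param_ratio:.1f}x")
```

On these figures, the cost difference is five orders of magnitude, while the difference in model size is roughly a factor of forty.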

Competition within the AI model level impacts the competition in other layers of the ecosystem. As described in Section 1, the AI industry is organised in an ecosystem, consisting of infrastructure providers, model and application developers, and the end-users. Even in a concentrated infrastructure level, fierce competition between AI models fuels competition at that infrastructure level. AI infrastructure providers aim to host as many (and cutting-edge) models as possible, competing on elements ranging from performance, such as processing power, to ease of use. Providers thus obtain access to technologies that can be incorporated into their offerings (see the Microsoft–OpenAI example above). Competing infrastructure providers respond by aligning with other developers, such as Google with Anthropic, which is now being poked by Amazon with investments.

While holding off on intervention to preserve the process of dynamic competition, authorities should further familiarize themselves with the new technology and monitor industry development closely. The UK, committed to playing a leading role in AI, announced a task force for that purpose.[43] The industry shows a willingness to cooperate. Last May-June, OpenAI CEO Sam Altman travelled the world to engage with policymakers. And Anthropic, Google, OpenAI, and Microsoft launched the ‘Frontier Model Forum’, aimed at promoting the responsible development of frontier models.[44] Of course, a cynic might consider this ‘outreach’ an effort of early-stage market leaders to capture policymakers with the goal of setting the current industry structure in stone. Nevertheless, the idea is that authorities—perhaps in the form of a European task force—can get acquainted with the technology and closely monitor whether the industry matures into a competitive one or not. The remaining regulatory question is: if not now, when?

3. Conclusion

We are still in the infancy of GenAI and it remains uncertain what teething problems may arise. In this short article, we set out arguments pro and contra regulation (in rather binary fashion). At present, we believe the focus should be on letting the process of dynamic competition play out. That does not mean there is no role for regulation at all. If typical competition problems (e.g., input foreclosure) arise, authorities should enforce the law. Authorities are doing well to study the sector so that they can intervene swiftly when that time comes. But it appears premature to start applying more intrusive regulation such as the Digital Markets Act. That instrument was itself based on extensive enforcement experience, which is lacking in the field of GenAI. And while there is such a thing as regulating too late, at present, the mistake would be one of regulating too early.

***

Citation: Anouk van der Veer and Friso Bostoen, Two Views on Regulating Competition in Generative AI, Dynamics of Generative AI (ed. Thibault Schrepel & Volker Stocker), Network Law Review, Winter 2024.

Footnotes

- [1] See Gopal Srinivasan and others, ‘A New Frontier in Artificial Intelligence: Implications of Generative AI for Businesses’ (Deloitte AI Institute 2023) 5; Thomas Hoppner and Luke Streatfeild, ‘What Competition Lawyers Need to Know about chatGPT and the Rise of AI’ (Hausfeld Competition Bulletin, 15 November 2023) 4–5.

- [2] The term was coined by Stanford University’s Center for Research on Foundation Models, see <https://crfm.stanford.edu/helm/>.

- [3] The technology was invented at Google Brain in 2017, see Ashish Vaswani and others, ‘Attention Is All You Need’ (2017) 31st Conference on Neural Information Processing Systems <https://papers.neurips.cc/paper/7181-attention-is-all-you-need.pdf>. For a visual demonstration, see ‘Generative AI exists because of the transformer’ (Financial Times, 12 September 2023) <https://ig.ft.com/generative-ai/>.

- [4] Thibault Schrepel, ‘Generative AI, pyramids and legal institutionalism’ (2023) 2023/4 Concurrences art. N° 113961.

- [5] John Roach, ‘How Microsoft’s bet on Azure Unlocked an AI Revolution’ (Microsoft News, 13 March 2023) <https://news.microsoft.com/source/features/ai/how-microsofts-bet-on-azure-unlocked-an-ai-revolution/>.

- [6] Michael Schade, ‘How ChatGPT and Our Language Models Are Developed’ (OpenAI) <https://help.openai.com/en/articles/7842364-how-chatgpt-and-our-language-models-are-developed>.

- [7] See <https://platform.openai.com/docs/introduction>.

- [8] Annabelle Gawer, ‘Digital Platforms and Ecosystems: Remarks on the Dominant Organizational Forms of the Digital Age’ (2022) 24 Innovation 110. The terminology goes back to James F Moore, ‘Predators and Prey: A New Ecology of Competition’ (1993) 71 Harvard Business Review 75.

- [9] Michael Jacobides, Carmelo Cennamo and Annabelle Gawer, ‘Towards a Theory of Ecosystems’ (2018) 39 Strategic Management Journal 2255.

- [10] Kyle Wiggers, ‘Microsoft invests billions more dollars in OpenAI, extends partnership’ (TechCrunch, 23 January 2023) <https://techcrunch.com/2023/01/23/microsoft-invests-billions-more-dollars-in-openai-extends-partnership/>.

- [11] ‘Meta and Microsoft Introduce the Next Generation of Llama’ (Meta, 18 July 2023) <https://about.fb.com/news/2023/07/llama-2/>.

- [12] ‘AI Alliance Launches as an International Community of Leading Technology Developers, Researchers, and Adopters Collaborating Together to Advance Open, Safe, Responsible AI’ (Meta, 4 December 2023) <https://ai.meta.com/blog/ai-alliance/>.

- [13] See <https://www.crunchbase.com/organization/anthropic/signals_and_news>.

- [14] See Dina Bass, ‘Microsoft Strung Together Tens of Thousands of Chips in a Pricey Supercomputer for OpenAI’ (Bloomberg, 13 March 2023) <https://www.bloomberg.com/news/articles/2023-03-13/microsoft-built-an-expensive-supercomputer-to-power-openai-s-chatgpt>.

- [15] Adam Brandenburger and Barry Nalebuff, Co-opetition: A Revolution Mindset that Combines Competition and Cooperation (Crown Business 1996).

- [16] Dan Milmo, ‘Google says new AI model Gemini outperforms ChatGPT in most tests’ (The Guardian, 6 December 2023) <https://www.theguardian.com/technology/2023/dec/06/google-new-ai-model-gemini-bard-upgrade>. Google’s victory lap did not last long as it turned out the Gemini demo was fabricated, see Benj Edwards, ‘Google’s best Gemini AI demo video was fabricated’ (Ars Technica, 8 December 2023) <https://arstechnica.com/information-technology/2023/12/google-admits-it-fudged-a-gemini-ai-demo-video-which-critics-say-misled-viewers/>.

- [17] Competition & Markets Authority, ‘AI Foundation Models’ (Initial Report, 18 September 2023); Autoridade da Concorrência, ‘Competition and Generative Artificial Intelligence’ (Issues Paper, 6 November 2023); European Commission, ‘Commission launches calls for contributions on competition in virtual worlds and generative AI’ (press release, 9 January 2024) IP/24/85.

- [18] Luca Bertuzzi, ‘MEPs call to ramp up Big Tech enforcement in competition review’ (Euractiv, 5 December 2023) <https://www.euractiv.com/section/competition/news/meps-call-to-ramp-up-big-tech-enforcement-in-competition-review/>.

- [19] Ben Thompson, ‘Windows and the AI Platform Shift’ (Stratechery, 24 May 2023) <https://stratechery.com/2023/windows-and-the-ai-platform-shift/>.

- [20] Ron Amadeo, ‘Google’s Iron Grip on Android: Controlling Open Source by any Means Necessary’ (Ars Technica, 21 October 2013) <https://arstechnica.com/gadgets/2018/07/googles-iron-grip-on-android-controlling-open-source-by-any-means-necessary/> and Google Android (Case AT.40099) Commission Decision of 18 July 2018.

- [21] Competition & Markets Authority, ‘AI Foundation Models’ (Initial Report, 18 September 2023) 3.102–3.108.

- [22] This is not necessarily painless: it requires self-cannibalization (i.e., displacing your own products with newer ones), as tech executives know well, see, e.g., Walter Isaacson, Steve Jobs (Abacus 2015) 376 (‘If you don’t cannibalize yourself, someone else will.’). This process generally involves innovation.

- [23] Competition & Markets Authority, ‘CMA seeks views on Microsoft’s partnership with OpenAI’ (press release, 8 December 2023) <https://www.gov.uk/government/news/cma-seeks-views-on-microsofts-partnership-with-openai>.

- [24] We refer here to disruptive innovation in the meaning of Clayton Christensen, The Innovator’s Dilemma: When New Technologies Cause Great Firms to Fail (Harvard Business Review Press 1997). Disruptive innovation occurs when a smaller company with fewer resources challenges incumbents by targeting the needs of low-end market segments, which are typically overlooked or underserved by incumbents that focus on improving their offerings for profitable customers. Over time, as these more affordable solutions improve in performance and quality, the smaller companies capture larger market segments and challenge incumbents. For further clarification, see Clayton M. Christensen, Michael E. Raynor, and Rory McDonald, ‘What is Disruptive Innovation?’ (2015) Harvard Business Review.

- [25] On GenAI as a sustaining innovation (using Christensen’s definition), see Ben Thompson, ‘AI and the Big Five,’ (Stratechery, 9 January 2023) <https://stratechery.com/2023/ai-and-the-big-five/>. In this sense, sustaining innovation refers to improving the performance of the incumbent’s product in the eyes of existing customers.

- [26] Google Search (Shopping) (Case AT.39740) Commission Decision of 27 June 2017.

- [27] See Friso Bostoen and David van Wamel, ‘Antitrust Remedies: From Caution to Creativity’ (2024) 14 Journal of European Competition Law & Practice 540.

- [28] Hasan Chowdhury, ‘A minor ChatGPT update is a warning to founders: Big Tech can blow up your startup at any time’ (Business Insider, 31 October 2023) <https://www.businessinsider.com/openai-chatgpt-pdfs-ai-startups-wrappers-2023-10>.

- [29] Peter Picht, ‘ChatGPT, Microsoft and Competition Law – Nemesis or Fresh Chance for Digital Markets Enforcement?’ (2023) <https://ssrn.com/abstract=4514311>.

- [30] David Teece, ‘Next-Generation Competition: New Concepts for Understanding How Innovation Shapes Competition and Policy in the Digital Economy’ (2012) 9/1 Journal of Law, Economics & Policy 97.

- [31] Regulation (EU) 2022/1925 of the European Parliament and of the Council on contestable and fair markets in the digital sector and amending Directives (EU) 2019/1937 and (EU) 2020/1828 [2022] OJ L265/1, art 3(1).

- [32] The same distinction can be found in OECD, ‘The Role of Innovation in Competition Enforcement, OECD Competition Policy Roundtable Background Note’ (2023) DAF/COMP(2023)12 paras 3 and 4 <https://www.oecd.org/daf/competition/the-role-of-innovation-in-competition-enforcement-2023.pdf> (competition-driven markets, requiring an incentives-based approach, and innovation-driven markets, requiring an impact-based approach).

- [33] Thibault Schrepel and Alex Pentland, ‘Competition Between AI Foundation Models’ (2023) 1 MIT Connection Science Working Paper 1, 16.

- [34] It must be recognized that this only applies for symmetrical regulation.

- [35] In line with this, Bill Gates has set higher expectations for innovations in cost and error reductions rather than improved performance for GPT-5. See Matthias Bastian, ‘Bill Gates does not expect GPT-5 to be much better than GPT-4’ (The Decoder, 21 October 2023) <https://the-decoder.com/bill-gates-does-not-expect-gpt-5-to-be-much-better-than-gpt-4/>.

- [36] Iulia Turc et al., ‘Well-Read Students Learn Better: On the Importance of Pre-training Compact Models’ (2019) <https://arxiv.org/pdf/1908.08962.pdf>; Timo Schick and Hinrich Schütze, ‘It’s Not Just Size That Matters: Small Language Models Are Also Few-Shot Learners’ (2021) <https://arxiv.org/pdf/2009.07118.pdf>; Yao Fu et al., ‘Specializing Smaller Language Models Towards Multi-Step Reasoning’ (2023). For an overview, see Zian Wang, ‘The Underdog Revolution: How Smaller Language Models Can Outperform LLMs’ (Deepgram, 17 May 2023) <https://deepgram.com/learn/the-underdog-revolution-how-smaller-language-models-outperform-llms>.

- [37] Jordan Hoffmann et al., ‘Training Compute-Optimal Large Language Models’ (2022) <https://arxiv.org/pdf/2203.15556.pdf>.

- [38] Hugo Touvron et al., ‘LLaMA: Open and Efficient Foundation Language Models’ (2023) <https://arxiv.org/abs/2302.13971>.

- [39] The MMLU (Massive Multi-task Language Understanding) benchmark is a way of measuring AI models. Whereas GPT-3 scores a 53.9% accuracy, LLaMA scores 57.8% and Chinchilla scores 67.5%. See <https://paperswithcode.com/sota/multi-task-language-understanding-on-mmlu>.

- [40] Phi-2 outperforms LLaMA on Big Bench Hard, commonsense reasoning, language understanding, math, and coding. See Mojan Javaheripi and Sébastien Bubeck, ‘Phi-2: The surprising power of small language models’ (Microsoft Research Blog, 12 December 2023) <https://www.microsoft.com/en-us/research/blog/phi-2-the-surprising-power-of-small-language-models/>. However, Phi-2 has an accuracy score of 56.7% according to the MMLU benchmark, slightly lower than LLaMA’s 57.8%.

- [41] Rohan Taori et al., ‘Alpaca: A Strong, Replicable Instruction-Following Model’ (2023) <https://crfm.stanford.edu/2023/03/13/alpaca.html>. Authors conducted a human evaluation on the inputs from the self-instruct evaluation set and found that Alpaca won 90 versus 89 comparisons against text-davinci-003.

- [42] The memo is available at <https://www.semianalysis.com/p/google-we-have-no-moat-and-neither>.

- [43] ‘A Pro-Innovation Approach to AI Regulation’ (United Kingdom, Department for Science, Innovation and Technology and Office for Artificial Intelligence 2023) CP 815 57.

- [44] ‘Microsoft, Anthropic, Google, and OpenAI launch Frontier Model Forum’ (Microsoft, 26 July 2023) <https://blogs.microsoft.com/on-the-issues/2023/07/26/anthropic-google-microsoft-openai-launch-frontier-model-forum/>.